Paging through data in PHP, a novel approach

Page is part of Articles in which you can submit an article

written by owen on 2014-Jun-23.

While working on a php CMS project I decided to do a stress test on the page navigation code. I dumped 1.5 million records into a mysql table that stores the pages. What I discovered led me to research and develop a solution to the problem that resulted from having to generate pagin links for a table with so many records.

For the test case I was displaying 50 records per page. Using MYSQL’s LIMIT function to limit the amount of rows returned. Before I run the main query (Q1) I do a TOTALRECS=“select count(1) from mytable†to determine the total amount of records in the table so that I can generate page navigation below the results e.g. Pages: 1, 2, 3, 4……...10010, 10011, 10012 Next Page.

What resulted from inserting 1.5 million records is that the frontend php webpage started to take roughly 30 seconds to render initially (afterwards it ran fine). While limiting to 50 records displayed per page is fast (took 3 seconds), the query to count the total records for the paging navigation was slow (took 30 seconds). I do session cache the TOTALRECS value but the initial slow down to get the page navigation count is obvious since the whole page locks up for 30 seconds. Most developers never encounter this problem but I wanted to solve it using as little "magic" as possible. Avoiding magic fixes means portability of the solution between databases in high. Assuming that there might be 1.5 million records is crazy talk but not impossible for a busy new website.

In finding a solution to any obvious problem there are a few steps that I often take;

Is it a real problem?

Hmmm I assume that most people will never use for a CMS long enough time to amass 1.5 million records in their page table. But why would you make something that degrades the longer you use it? I’m looking at you iphone. Yes, it is a real problem. Not “near future†but nonetheless real.

After determining that I had a problem (lol) then I proceeded to do the research.

Check the internet

Most problems can be solved by a quick search of the internet. But in this case I realized that an answer to this question was not going to be easy to find. I see this all the time on the internet. Its a 2 fold problem. People who want specific answers to specific problems - meeting up with the people with no practical problem solving experience and have band-aid solutions that they spew off the top of their heads. These people are often not only wrong but also lack any kind of good situational analytical skills. You could provide 50 different questions they would provide the same answer. Its not productive. NO SOLUTION.

Throw some hardware at the problem

Bigger sites like facebook and Wikipedia tackle the problem with hardware. And one cannot assume that people are going to be able to afford to buy server farms in alaska just so they can run your software. One has to always code within limits. And my single core XP 1.5 mhz computer was it.

Optimize the table with Indexes

A few people had suggested this adding indexes to the table. However, the problem is not MYTABLE or indexes because the observant would have noticed that the main query with the limit comes back in 3 seconds. The total query is the one that takes 30 seconds because it has to do a full table scan. Indexing only solves problems for specific use cases on specific tables with WHERE conditions that match the indexes. Indexes can often be added after the fact and with a simple “select count(1) from mytable†there is pretty much nothing you can index to make that faster. A old article Optimized Pagination using MySQL suggest I use SQL_CALC_FOUND_ROWS which is MYSQL specific. Unfortunately this did not help much in this case and reeks of “magicâ€. Most of the other optimizations suggested by the article such as getting a total_rows table property but that was too specific to MYSQL and would not take the WHERE conditions into account. NOT A SOLUTION.

What is Everyone else doing

When the internets fail then you have to look around try to see how everyone else solves this problem;

Most websites do is not show a navigation at all or they have a "show more" button that allows for stepping through the results. By using a “show more†or infinite scrolling pages users are limited to only the current and next page. This is appropriate in certain situations but limiting to power users. I personally often find it annoying especially on tumblr (but it might improve readership). Either way most people in social media are not concerned with the past and so not being able to skip to old pages is not a major concern. NOT A SOLUTION.

Forum software such as phpbb have a search cache table which they use to store the search results that are returned. This results cache is faster to count because it will end up with less records than the main table but I am not a fan of cache tables. They need maintenance and would have to probably create allot of them. NOT A SOLUTION.

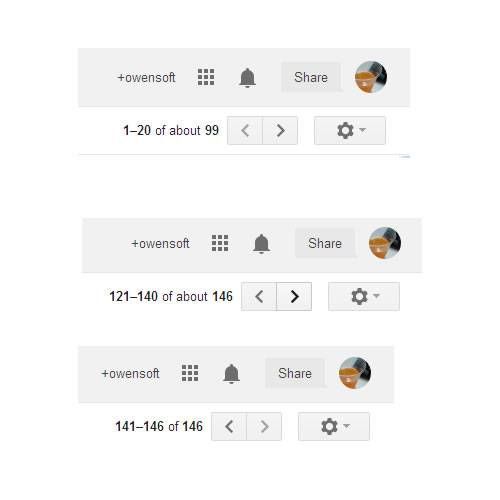

Then I remember Gmail. I had always noticed gmail had a weird and often annoying type of page navigation (pictured). SOLUTION! By not doing a total count gmail avoids having to do check how deep this rabbit hole goes while still having the paging functionality. Yes the user loses the jump to LAST page link but all things considered the navigation is quicker.

Final solution

With the gmail approach I could skip doing the total count all together. I would redesign my paging code to not depend on the total number of records in order to generate links. But how will I know if there is a next page or 10? This was a another problem. I had just saved 30 seconds but I did not want to have users clicking on the Page 2 link and seeing no results popup. What do I do? Solution: extend the limit of the original query.

Since the database has gone through all the effort of processing SELECT * FROM MYTABLE LIMIT 0, 50 why not just change the limit to 0, 150? This way I can leverage the power of the database and provide additional functionality to the user at a negligible cost.

Some may argue that selecting an extra 100 records in a waste of resources but when you consider that the time it takes to query 50 records and is almost the same it as it takes to query 150 (and certainly less that it takes to get TOTAL_COUNT) the trade off ends in a win win situation for me and a little less so for the user.

How to interpret the extra records returned

Assuming we are paging 50 records at a time I will limit the returned records to 50x3 which results in 150 results maximum. I pass this total returned number to the code that generates the page nav. If the query with the new extended limit returns;

- Less than page 50: I know I only have only have one page so there is no need to show navigation its all good. Unless I am not on page one (see off the edge).

- Less than 50 x 2 but greater than 50: I know I have only 1 extra page only.

- More than 50 x 2 but less than 50 x 3: I know I have 2 extra pages only.

- Exactly 50 x 3: I know I have definitely 2 extra pages and a possible infinite more.

Off the edge: If I get no results and I am on the first page then clearly I have no records at all and I don’t need any form of navigation. If I am on page 5000 for example and I get no results I have to make some assumptions;

- The user clicked a more link and hit the edge without me being able to detect it. In this case I know for certain that page 1, 2, 3 exist so I generate links to those pages.

- Pages greater than this page obviously wouldn’t exist so leave those out.

- There is a chance that the user might just have tried to hack in their own page link. Those people are on their own. If they are smart modify a URL they are smart enough to guess a new reasonable number and dont need further help.

- Note that I do not generate links for page 4990, 4991, 4992 etc because the last thing you want to do is give bots extra pointless links to crawl which might be just blank pages (that lead to other blanks that lead to other blanks, infinity). But I do give links back to page 1, 2, 3 since those would be most useful and most likely to exist.

- If the user navigated off the edge naturally then a simple press of the “back†button in the browser will get them back on track without being disoriented.

Conclusion

If you don’t have 1.5 million records to scroll through or have parameters to keep the total count small then you certainly will not need to go through all this work. But if you have a slowly growing table that might fluctuate in size over time then this might be a good solution to maintain performance and functionally of the paging with minimal side effects and no caching.

permanent link. Find similar posts in Articles.

comments

Comment list is empty. You should totally be the first to Post your comments on this article.